Last Updated on June 15, 2023 by Tim Wells

I still have a 720p television.

There. I said it.

And since the average lifespan of a TV is between 5 and 10 years, I’ll bet I’m not the only one.

It’s not that my TV is dying. It’s a Samsung and still looks great, actually. But it’s 720p, and that automatically makes it obsolete, right?

Or…does it?

This got me wondering what the real difference between 720p and 1080p TVs is, and at what point you can actually see it.

So in this article, I’m going to explain the difference between 720p and 1080p (and 1080i). Then I will explain why (and when) it makes a difference. Then, you can decide if you need to upgrade now or whether that old 720p TV of yours still has some life left in it.

I’ve also done this same analysis for the difference between 1080p TVs and 4K TVs. You can check out that article here.

Let’s dive in!

How Is TV Resolution Measured?

When we talk about the picture quality of TVs and computer monitors, we usually mean their resolution. That’s the number of pixels on the screen. A pixel is the smallest dot the screen can light up in a given color. On an LCD TV, each pixel is a liquid crystal cell illuminated by a backlight behind the screen. If the screen size remains constant, a higher resolution means a sharper display.

But instead of talking about the total number of pixels, we shorten that to the number of pixels on one axis (vertically or horizontally).

Here’s an example:

The full resolution of a 720p TV is 1280 pixels wide by 720 pixels high on the screen. We multiply the two numbers together to get the total number of pixels on the screen.

720p = 1280 pixels wide x 720 pixels high = 921,600 total pixels.

Since it doesn’t really roll off the tongue to say we have a 921K TV, the TV company marketing departments decided to shorten that to the number of pixels on the vertical axis: 720.

Progressive vs. Interlaced: What Do The ‘p’ & ‘i’ Mean?

Tacked on the end of that resolution number is either a ‘p’ or ‘i’, which stands for either Progressive or Interlaced.

This refers to how the image is drawn on the screen. In general, a progressive scan (p) picture will look smoother than an interlaced (i) picture at the same resolution.

But why?

How Interlaced Video (i) Works

Interlaced video was an early technique for increasing the resolution on your TV screen.

It worked by drawing every other line of an image on the screen in a single pass. Then, on the next pass, it would draw the remaining lines. If you looked at each pass individually, it would only ever show half of the image. But played back quickly enough, the passes trick our eyes into seeing one complete image.

For the most part, this worked well. The problem was that the two passes weren’t captured at the same moment. So for fast-moving images, like sports, you’d see strange artifacts where the two halves didn’t align correctly.

Still, interlaced video was a great way to increase resolution without increasing the video’s bandwidth.

By contrast, progressive scan draws the entire image, line by line, on every frame. That means motion is more fluid, and there are no weird artifacts when the image’s subject is moving quickly. Action movies and sports look much better in progressive scan.
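If you want to see why fast motion trips up interlaced video, here’s a minimal Python sketch. It treats a frame as a list of rows, splits it into its two half-images (usually called fields), and weaves them back together. The function names are my own, made up for illustration, and don’t come from any video library.

```python
def split_fields(frame):
    """Split a frame (a list of rows) into its two interlaced fields."""
    top_field = frame[0::2]      # rows 0, 2, 4, ...
    bottom_field = frame[1::2]   # rows 1, 3, 5, ...
    return top_field, bottom_field


def weave(top_field, bottom_field):
    """Rebuild a full frame by alternating rows from the two fields."""
    frame = []
    for i in range(len(top_field) + len(bottom_field)):
        source = top_field if i % 2 == 0 else bottom_field
        frame.append(source[i // 2])
    return frame


# A tiny 4-row "frame" captured at one instant.
frame_a = ["A0", "A1", "A2", "A3"]
# The same scene a moment later, after the subject has moved.
frame_b = ["B0", "B1", "B2", "B3"]

top_a, bottom_a = split_fields(frame_a)
top_b, bottom_b = split_fields(frame_b)

# Static scene: both fields come from the same instant.
static = weave(top_a, bottom_a)

# Moving scene: the second field comes from the later instant.
moving = weave(top_a, bottom_b)

print(static)  # ['A0', 'A1', 'A2', 'A3']
print(moving)  # ['A0', 'B1', 'A2', 'B3']
```

In the static case, every row comes from the same moment, so nothing is lost. In the moving case, every other row comes from a later moment, and that mismatch is what shows up on screen as jagged edges during fast motion.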

Unfortunately, that comes at a cost.

At the same resolution and refresh rate, progressive scan needs roughly twice the bandwidth of interlaced video. This is part of what drove the adoption of better and better cables over the years.

What Is HD TV Resolution?

Now that you understand how to read a resolution spec, let’s look at an easy question that has a rather complicated answer:

What is HD resolution?

In the old days, TV signals were 480i in North America (NTSC) or 576i in Europe (PAL). So when HD came out, it was defined as ‘anything higher than Standard Definition.’

Predictably, manufacturers had different ideas of what that really meant, and three different HDTV resolutions emerged.

720p was the clear winner early on, so manufacturers called it High-Definition, or HDTV. But when 1080p became more cost-effective, suddenly, there were two competing HD formats.

1080i (interlaced) was short-lived, at least for consumer televisions. However, 1080p, which was known as “Full HD” (FHD) at the time, quickly became the dominant HD television resolution.

One final note: based on the “official” definition of High-Definition, even 4K and 8K TVs would classify as HDTVs. While technically accurate, TV manufacturers found a winner by calling them “4K TVs”, so that’s how they’re marketed.

Putting aside the marketing labels, the best way to compare 720p and 1080p is to look at their pixel count.

We’ll do that right after a quick word about 1080i.

A Quick Word About 1080i

1080i was one of the early competing television formats. To many, it seemed the best of both worlds.

The resolution was higher than the standard 720p resolution that was becoming popular. However, 1080i used the same amount of bandwidth as the lower-resolution, progressive scanning video.
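Some rough arithmetic backs this up. The sketch below counts raw, uncompressed pixels drawn per second, assuming every format refreshes 60 times per second. That refresh rate is my assumption for illustration. Real broadcasts are compressed, so the actual bandwidth depends on the codec and bitrate.

```python
def raw_pixel_rate(width, height, refresh_hz, interlaced=False):
    """Uncompressed pixels drawn per second for a given video format."""
    # An interlaced format draws only half of the lines on each pass.
    lines_per_pass = height // 2 if interlaced else height
    return width * lines_per_pass * refresh_hz


# Assumed refresh rate: 60 passes per second for every format.
formats = {
    "720p": raw_pixel_rate(1280, 720, 60),
    "1080i": raw_pixel_rate(1920, 1080, 60, interlaced=True),
    "1080p": raw_pixel_rate(1920, 1080, 60),
}

for name, rate in formats.items():
    print(f"{name}: {rate / 1e6:.1f} million pixels per second")
```

Under these assumptions, 720p works out to about 55 million pixels per second and 1080i to about 62 million, which is in the same ballpark. 1080p needs about 124 million, or twice as much as 1080i.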

Because it was interlaced, though, it also had the usual interlacing problems, like mismatched images during motion and jaggies where there should be smooth curves.

For a brief time, the industry considered 1080i to be better than 720p. After further testing, the two turned out to be essentially the same.

Progressive scan offers smoother images by default, so a 1080p image will look better than a similar 1080i image. Additionally, many TVs and streaming devices automatically convert interlaced signals into progressive scan signals.

So, if a 1080i signal is fed into a 720p TV, it will be deinterlaced and down-converted to 720p. If it’s fed into a 1080p TV, it will still be deinterlaced. Only this time, there’s no change in resolution.

The final display will be 1080p.

Difference Between 720p vs 1080p: Pixel Count

In a previous section, I gave you a simple calculation to determine how many pixels are on your TV screen.

That calculation is:

Number of Pixels High x Number of Pixels Wide = Total Number of Pixels

Pretty simple, eh?

The more pixels on your screen, the sharper the image will appear to the human eye (up to a point).

So, let’s find out how the pixel counts compare between a 720p television and a 1080p television. Let’s start with 720p.

As I said earlier, a 720p TV is 1280 pixels wide and 720 pixels high. Multiplying the two together gives a total of 921,600 pixels.

720p = 1280 pixels wide x 720 pixels high = 921,600 total pixels.

That sounds like a lot. Compared to the 307,200 pixels in a 480p television, it was! However, 1080p resolution made another massive leap in how many pixels manufacturers could squeeze onto a TV panel.

1080p TVs are 1920 pixels wide and 1080 pixels high. So that leads us to this:

1080p = 1920 pixels wide x 1080 pixels high = 2,073,600 total pixels.

That’s more than double the number of pixels in a 720p television and almost seven times that in a 480p, standard-definition television.
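If you’d like to check the math yourself, here’s a short Python sketch. The 640 x 480 size for 480p is my assumption. It’s the size that matches the 307,200-pixel figure above.

```python
# Width and height in pixels for each resolution discussed in this article.
# 640 x 480 is assumed for 480p because it matches the 307,200-pixel figure.
resolutions = {
    "480p": (640, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}

totals = {name: width * height for name, (width, height) in resolutions.items()}

for name, total in totals.items():
    ratio = totals["1080p"] / total
    print(f"{name}: {total:,} pixels (1080p has {ratio:.2f}x as many)")
```

This prints a ratio of 2.25 for 720p and 6.75 for 480p.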

Can You Tell the Difference Between 720p & 1080p?

Here’s another easy question with a complicated answer: Can you tell the difference between 720p and 1080p?

That depends on how big your television is and how far away you’re sitting.

To illustrate, let’s use the example of my 43″ Samsung TV. I have it in my upstairs den, and our couch is about six feet from the TV.

Manufacturers recommend sitting about three times the vertical screen height away from your television. For my TV, that’s about 5.3 feet. My couch is slightly farther back than that, but not by much.
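Here’s a rough Python sketch of that rule of thumb. It assumes a 16:9 screen and measures only the picture area, ignoring the bezel and stand. The function names are mine, made up for this example.

```python
import math


def screen_height_inches(diagonal_inches, aspect_w=16, aspect_h=9):
    """Height of the picture area, given its diagonal and aspect ratio."""
    return diagonal_inches * aspect_h / math.hypot(aspect_w, aspect_h)


def recommended_distance_feet(diagonal_inches, height_multiple=3):
    """Viewing distance as a multiple of the screen height, in feet."""
    return height_multiple * screen_height_inches(diagonal_inches) / 12


height = screen_height_inches(43)
distance = recommended_distance_feet(43)
print(f"Screen height: {height:.1f} in")
print(f"Suggested distance: {distance:.1f} ft")
```

For a 43″ screen, the picture is about 21 inches tall, so three times that comes to a bit over 5 feet.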

Could I see a difference if I upgraded to a 1080p TV at that distance?

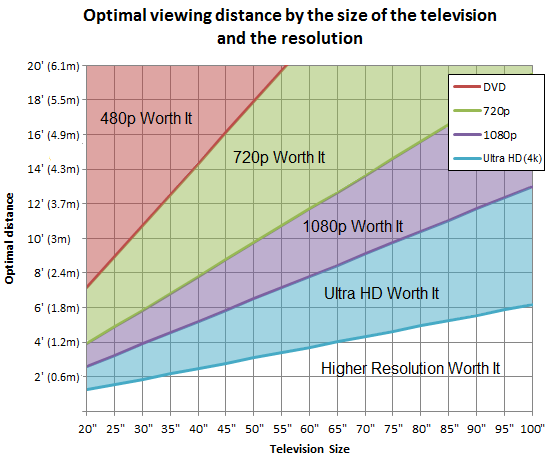

To find out, let’s look at this chart from Rtings.com.

This chart has TV sizes along the bottom and the distance from your TV going up the left-hand side. To read the chart, find the size of your TV, and go up until you get to how far away it is.

I’ll use the 45″ line since it’s the closest to my TV size.

This chart tells me that I could put my TV up to about 9′ away from my couch and still be able to see the difference between 1080p and 720p.

It also tells me that I should NOT upgrade to a 4K TV because my six-foot distance is on the border where 1080p and Ultra HD (4K) look about the same.
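One common way to estimate distances like these is to assume the eye can resolve about one arcminute of detail, a rule of thumb for 20/20 vision. I’m assuming that model here for illustration. I can’t say whether the chart was built exactly this way, but a minimal Python sketch lands on very similar numbers for a 45″ 16:9 screen.

```python
import math

ARCMINUTE_RAD = math.radians(1 / 60)  # assumed limit of 20/20 vision


def max_useful_distance_feet(diagonal_inches, horizontal_pixels,
                             aspect_w=16, aspect_h=9):
    """Distance beyond which one pixel subtends less than one arcminute."""
    width = diagonal_inches * aspect_w / math.hypot(aspect_w, aspect_h)
    pixel_pitch = width / horizontal_pixels  # width of one pixel, in inches
    return pixel_pitch / math.tan(ARCMINUTE_RAD) / 12


distances = {
    "720p": max_useful_distance_feet(45, 1280),
    "1080p": max_useful_distance_feet(45, 1920),
}

for label, feet in distances.items():
    print(f"{label} pixels blur together beyond about {feet:.1f} ft")
```

Sit closer than the 720p distance (just under 9 feet) and the extra detail of 1080p should be visible. Sit farther back than the 1080p distance (just under 6 feet) and a 4K panel has little left to add.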

720p vs 1080p: Content

But even if your TV is the right size and viewing distance away, you might not need to upgrade.

That’s because most TV stations in the United States broadcast almost exclusively in either 720p or 1080i. So if all you use your old TV for is broadcast TV, it’s probably not worth upgrading.

On the flip side, 1080p resolution is the current standard for most streaming services and Blu-ray movies.

You can also get some 4K content from most streaming services and on a handful of 4K UHD Blu-ray discs, but comparing 4K to 1080p is a whole other conversation.

How Much Better is 1080p Than 720p? When Should You Upgrade?

There is often little visible difference between 720p and 1080p when the screen is smaller than 50 inches and you sit far enough back.

The smaller screen size compensates for the fewer pixels in 720p. The differences are harder to see from more than 2m away from a 50-inch TV screen.

Sometimes a 720p picture may even seem “better” than a 1080p picture; the reason is usually related to the bandwidth.

The simplest explanation is that, at the same bandwidth, a 720p video needs less compression than a 1080p video because it has fewer pixels to encode.
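To put a number on that, here’s a small Python sketch that spreads the same bitrate over each resolution. The 5 Mbps bitrate and 30 frames per second are hypothetical values I picked for illustration, not figures from any particular service.

```python
def bits_per_pixel(bitrate_mbps, width, height, fps=30):
    """Average compressed bits available for each pixel of each frame."""
    return bitrate_mbps * 1_000_000 / (width * height * fps)


BITRATE_MBPS = 5  # hypothetical stream bitrate, for illustration only

bpp_720 = bits_per_pixel(BITRATE_MBPS, 1280, 720)
bpp_1080 = bits_per_pixel(BITRATE_MBPS, 1920, 1080)

print(f"720p:  {bpp_720:.3f} bits per pixel")
print(f"1080p: {bpp_1080:.3f} bits per pixel")
print(f"720p gets {bpp_720 / bpp_1080:.2f}x the data per pixel")
```

Whatever bitrate you plug in, the 720p stream gets 2.25 times as much data per pixel, so the encoder can compress it less aggressively.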

How Much Are 720p And 1080p Display TVs?

1080p HD TVs are generally more expensive than 720p HD display TVs.

The price varies depending on factors such as brand, display technology, features, and specialized capabilities.

If this is your first time buying a digital HD TV, then there is no problem starting with an entry-level 720p HD TV. This TV will still give you a high-definition experience, especially if you choose a screen less than 50 inches. This will give you the feel of HD TVs, and you will see whether you need to upgrade or not.

If it’s just for general use, the 720p option will usually be perfect.

Getting value for money is a priority if you are on a budget, and for most people, saving money is the wiser choice.

However, if you want to upgrade your current digital TV, 1080p is the best option. You can also enjoy a bigger screen with this option. This is also the best TV to get if you plan to use it as a giant computer monitor.

Just keep in mind that at the full 1920×1080 resolution, icons and text can look too small from across the room, so you may want to increase the scaling in your computer’s display settings.

The Verdict

There’s no doubt that 1080p is better than 720p, but the real question is whether upgrading your old 720p TV is worth it.

Depending on the size of the TV, how far away you sit when watching it, and what content you watch on it, you may not see much of a benefit to upgrading.

The most important thing is understanding what all the specifications you encounter mean and knowing what your needs are.

I’m not going to tell you that upgrading your TV is a bad decision, but it might not be the best bang for your buck.
